|

Gu Zhang

I am a first-year Ph.D. student in Computer Science at the Institute for Interdisciplinary Information Sciences (IIIS), Tsinghua University, under the supervision of Prof. Huazhe Xu. I obtained my bachelor's degree from Shanghai Jiao Tong University (SJTU) with a GPA ranking of 1/88 and won the Best Bachelor Thesis Award. My research focuses mainly on robotic manipulation, aiming to develop robots equipped with generalizable, versatile and robust manipulation capabilities. I am also interested in tactile sensing and human-robot interaction. During my undergraduate study, I was fortunate to be mentored by Prof. Cewu Lu and Prof. Junchi Yan. I am also a research visiting student/visitor at Massachusetts Institute of Technology and MIT-IBM Watson AI Lab under the supervision of Prof. Josh Tenenbaum and Prof. Chuang Gan.

Email / Google Scholar / Github / WeChat / Twitter |

|

News |

|

Publications

\* indicates equal contributions, † indicates equal advising. Representative papers are highlighted. |

|

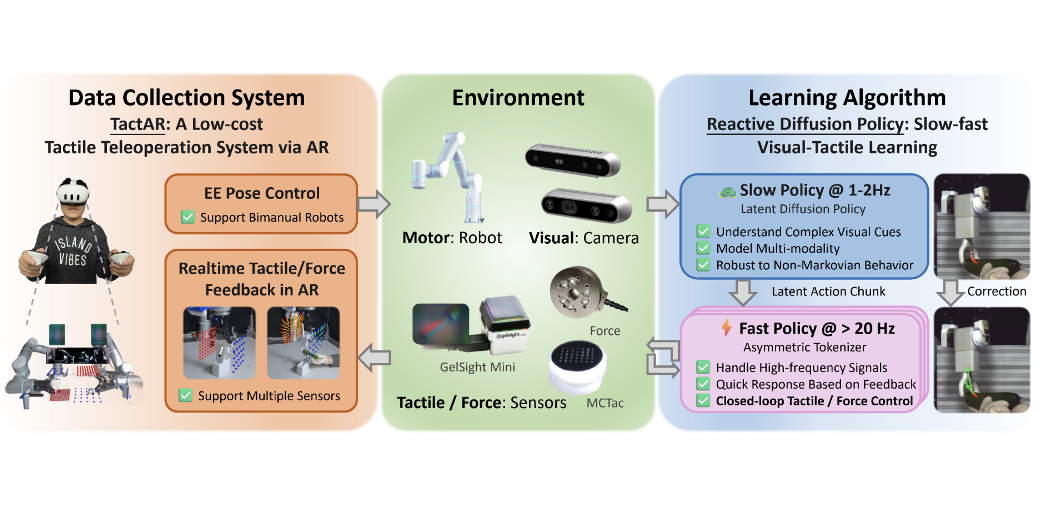

Reactive Diffusion Policy: Slow-Fast Visual-Tactile Policy Learning for Contact-Rich Manipulation

Han Xue*, Jieji Ren*, Wendi Chen*, Gu Zhang, Yuan Fang, Guoying Gu, Huazhe Xu† and Cewu Lu† Best Student Paper Finalist, Robotics: Science and Systems (RSS), 2025 Best Paper Award, ICRA Beyond Pick and Place Workshop, 2025 project page / paper / twitter In this paper, we introduce TactAR, a low-cost teleoperation system that provides real-time tactile feedback through Augmented Reality (AR), along with Reactive Diffusion Policy (RDP), a novel slow-fast visual-tactile imitation learning algorithm for learning contact-rich manipulation skills. |

|

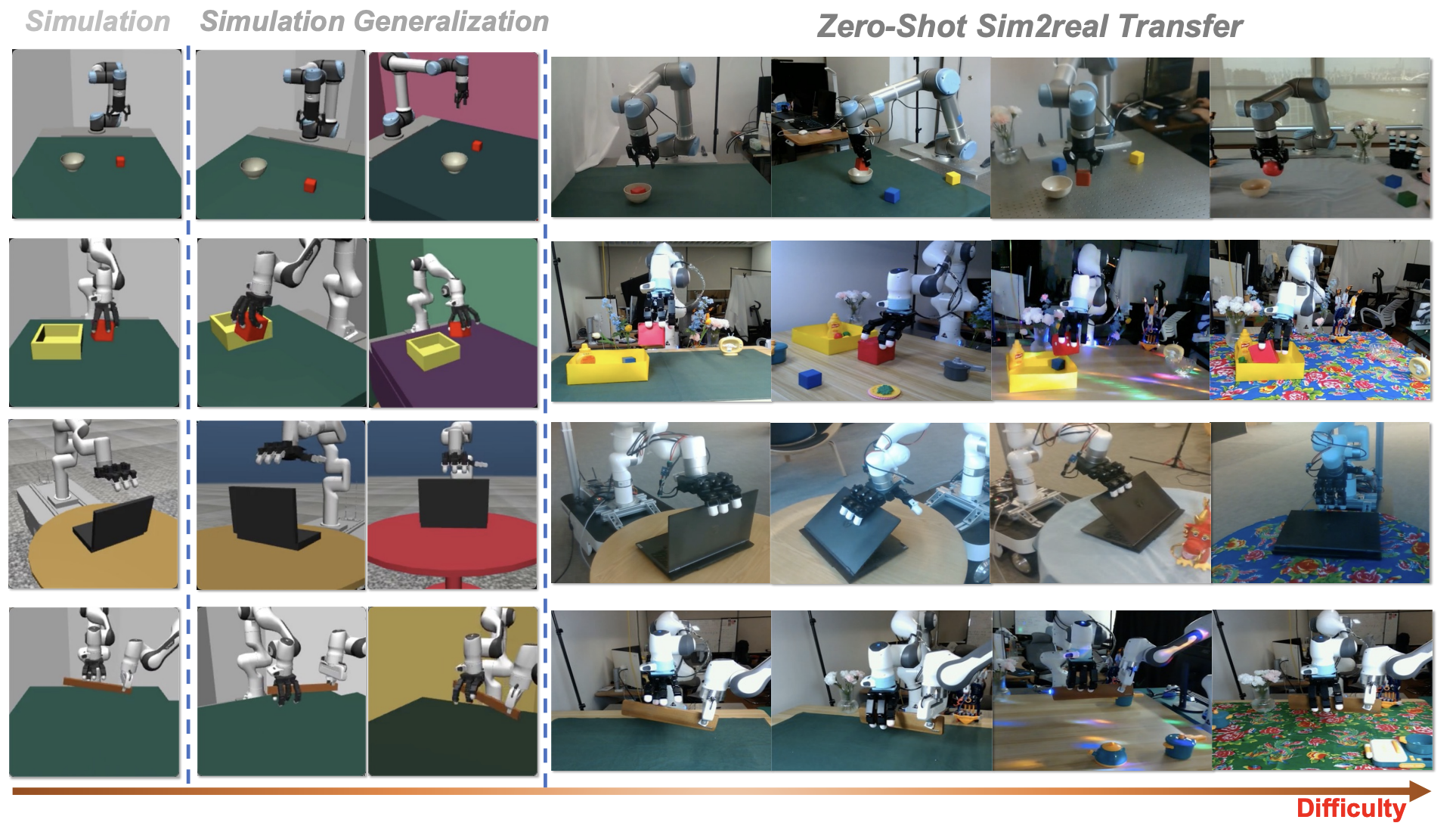

Learning to Manipulate Anywhere: A Visual Generalizable Framework For Reinforcement Learning

Zhecheng Yuan*, Tianming Wei*, Shuiqi Cheng, Gu Zhang, Yuanpei Chen, and Huazhe Xu Conference on Robot Learning (CoRL), 2024 project page / paper / twitter In this paper, we propose Maniwhere, a generalizable framework tailored for visual reinforcement learning, enabling the trained robot policies to generalize across a combination of multiple visual disturbance types. |

|

3D Diffusion Policy: Generalizable Visuomotor Policy Learning via Simple 3D Representations

Yanjie Ze*, Gu Zhang*, Kangning Zhang, Chenyuan Hu, Muhan Wang and Huazhe Xu Robotics: Science and Systems (RSS), 2024 project page / paper / code / twitter In this paper, we present 3D Diffusion Policy (DP3), a novel visual imitation learning approach that incorporates the power of 3D visual representations into diffusion policies, a class of conditional action generative models. |

|

DIFFTACTILE: A Physics-based Differentiable Tactile Simulator for Contact-rich Robotic Manipulation

Zilin Si*, Gu Zhang*, Qingwei Ben*, Branden Romero, Xian Zhou, Chao Liu and Chuang Gan International Conference on Learning Representations (ICLR), 2024 Best Paper Award, RSS Noosphere (Tactile Sensing) Workshop, 2024 project page / paper / code / twitter In this paper, we introduce DIFFTACTILE, a physics-based and fully differentiable tactile simulation system designed to enhance robotic manipulation with dense and physically accurate tactile feedback. |

|

Thin-Shell Object Manipulations With Differentiable Physics Simulations

Yian Wang*, Juntian Zheng*, Zhehuan Chen, Xian Zhou, Gu Zhang, Chao Liu, and Chuang Gan International Conference on Learning Representations (ICLR), 2024 [Spotlight] project page / paper / code In this paper, we introduce ThinShellLab, a fully differentiable simulation platform tailored for diverse thin-shell material interactions with varying properties. |

|

Robo-ABC: Affordance Generalization Beyond Categories via Semantic Correspondence for Robot Manipulation

Yuanchen Ju*, Kaizhe Hu*, Guowei Zhang, Gu Zhang, Mingrun Jiang, and Huazhe Xu European Conference on Computer Vision (ECCV), 2024 project page / paper / code In this paper, we present Robo-ABC, a framework through which robots can generalize to manipulate out-of-category objects in a zero-shot manner without any manual annotation, additional training, part segmentation, pre-coded knowledge, or viewpoint restrictions. |

|

ArrayBot: Reinforcement Learning for Generalizable Distributed Manipulation through Touch

Zhengrong Xue*, Han Zhang*, Jingwen Chen, Zhengmao He, Yuanchen Ju, Changyi Lin, Gu Zhang, and Huazhe Xu International Conference on Robotics and Automation (ICRA), 2024 project page / paper / code In this paper, we present ArrayBot, a distributed manipulation system consisting of a 16×16 array of vertically sliding pillars integrated with tactile sensors, which can simultaneously support, perceive, and manipulate tabletop objects. |

|

Flexible Handover with Real-Time Robust Dynamic Grasp Trajectory Generation

Gu Zhang, Hao-shu Fang, Hongjie Fang and Cewu Lu IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023 [Oral] paper In this paper, we propose an approach for effective and robust flexible handover, which enables the robot to grasp moving objects that follow flexible motion trajectories with a high success rate. |

|

Understanding and Generalizing Contrastive Learning from the Inverse Optimal Transport Perspective

Liangliang Shi, Gu Zhang, Haoyu Zhen, and Junchi Yan International Conference on Machine Learning (ICML), 2023 paper In this paper, we aim to understand contrastive learning (CL) from a collective point set matching perspective and formulate CL as a form of inverse optimal transport (IOT). |

|

Relative Entropic Optimal Transport: a (Prior-aware) Matching Perspective to (Unbalanced) Classification

Liangliang Shi, Haoyu Zhen, Gu Zhang, and Junchi Yan Conference on Neural Information Processing Systems (NeurIPS), 2023 paper In this paper, we propose a new variant of optimal transport, called Relative Entropic Optimal Transport (RE-OT), and verify its effectiveness for enhancing visual learning.

Selected Awards and Honors |

|

Service |

|

|

Design and source code from Jon Barron's website. My profile photo was taken by Ziming Tan. |